Scaling Blockchains

By: Barnabé Monnot

"Fees are too high!" - Unknown Guardian

If you've spent any time in the crypto rabbit hole, you've likely heard that before. DeFi, NFT booms, bots... Blockspace is a hot commodity, running in short supply! Meanwhile, dreams of mass adoption, driven by non-financial applications such as DAOs, domain names or decentralized identity, won't be realized until sub-cent transactions are a reality. There is no other way but to scale.

To make sense of it all, we'll focus on the why and the how of scalability: the problem of augmenting capacity while preserving other properties such as decentralization, security or composability. We will look at the current solutions and observe how "Layer 1" protocols are moving towards a modular approach, with the novel idea of data availability layers. The field is moving at light-speed, and our aim here is to give you the tools to find your way around.

Scaling a monolith

Let's first talk about a naive way to scale a chain: doubling or 10x'ing whatever parameters currently govern chain capacity. We call this monolithic scaling approach naive because it is typically the first scaling solution invoked, and while it sometimes makes sense, the trade-offs are generally glossed over. Simply put, greater capacity means the infrastructure supporting the chain also needs to scale up. The real question here is: can we do that safely?

Nodes run the chain

What do we mean by infrastructure? Who exactly supports the chain?

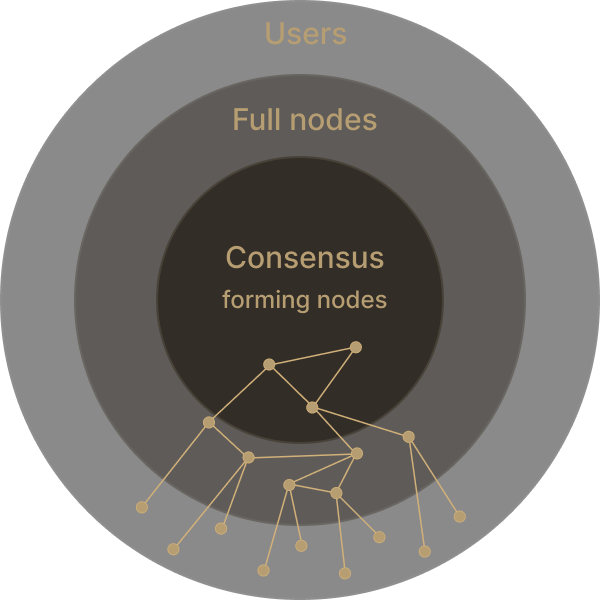

Full nodes are machines that peer with each other over a network to receive the latest blocks, which they validate to ensure that the block contents are legit. Consensus-forming nodes are those that participate in the consensus.

In Proof-of-Work-based consensus mechanisms, these nodes are called miners.

In Proof-of-Stake, they are known as validators or stakers.

Consensus-forming nodes periodically get to produce new blocks, which they pack with pending user transactions obtained from a transaction pool, so we will call them "block producers". Block producers receive incentives from the network, in the form of block rewards (newly minted coins) and transaction fees (paid for by users).

Concentric plot of nodes and users

On Bitcoin, the blocksize wars were all about increasing the size of a block above 1MB. By storing and processing all blocks of the Bitcoin blockchain, a full node is able to determine the current set of outstanding, spendable notes, called UTXOs. Bigger blocks mean storing the chain takes more space, so nodes must increase their *storage* capacity to hold the entire history.
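The storage argument can be made concrete with a back-of-the-envelope sketch. It assumes every block is full and a block interval of roughly ten minutes; the function name and the block sizes tried are illustrative, not taken from any client.

```python
def yearly_chain_growth_gb(block_size_mb: float, block_interval_s: float = 600.0) -> float:
    """Upper bound on yearly chain growth, assuming every block is full."""
    seconds_per_year = 365 * 24 * 3600
    blocks_per_year = seconds_per_year / block_interval_s
    return blocks_per_year * block_size_mb / 1000  # MB -> GB (decimal units)


# Storage needs grow linearly with the block size parameter.
for size_mb in (1, 2, 8):
    print(f"{size_mb} MB blocks: ~{yearly_chain_growth_gb(size_mb):.1f} GB/year")
```

Under these assumptions, 1 MB blocks add roughly 53 GB per year at most, and every doubling of the block size doubles that figure.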

Bandwidth is also a concern, as bigger blocks disseminate slower over the peer-to-peer network connecting block producers. When a miner receives a new block extending the longest chain, they should reorient their hash power to mine on the new block. With bigger blocks, the probability that two miners find a new block before receiving each other's new block increases, creating a fork in the network and short-term instability.
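To get a feel for the bandwidth effect, here is a toy model, not a measurement. It assumes block discovery across the network is a Poisson process with a mean interval of ten minutes, and asks how likely it is that some other block is found while a new block is still propagating. The function name and the delays are hypothetical.

```python
import math


def fork_probability(propagation_delay_s: float, block_interval_s: float = 600.0) -> float:
    """Chance that a competing block is found during the propagation delay.

    Toy model: block arrivals are Poisson with mean `block_interval_s`.
    """
    return 1.0 - math.exp(-propagation_delay_s / block_interval_s)


# Bigger blocks take longer to disseminate, which raises the fork rate.
for delay_s in (2, 6, 60):
    print(f"{delay_s:>3} s delay: {fork_probability(delay_s):.2%} chance of a fork")
```

In this model a six-second delay gives about a 1% chance of a competing block, while a one-minute delay pushes it to roughly 9.5%.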

On Ethereum, the parameter equivalent to Bitcoin's block size is the gas limit of a block. Ethereum smart contracts store variables and execute any program made up of elementary operations known as Ethereum Virtual Machine (EVM) instructions. To avoid non-terminating execution, like an infinite loop, compute and storage used during the execution are metered in gas.

The block gas limit is set so that the total gas used by all transactions in the block does not exceed a certain amount. Increasing the block gas limit means a block induces more compute or generates more content to be stored permanently by all full nodes. This imposes greater storage requirements, as well as potentially greater compute capabilities (faster CPUs) on full nodes tracking the chain.
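As a rough illustration of how the gas limit caps a block's contents, the sketch below greedily packs a hypothetical transaction pool by offered fee. The `Tx` type, `build_block`, and all numbers are assumptions made for this example; real clients use more involved selection logic, and a transaction's actual gas use is only known once it is executed.

```python
from dataclasses import dataclass


@dataclass
class Tx:
    sender: str
    gas: int          # gas the transaction consumes (assumed known up front)
    fee_per_gas: int  # what the user offers per unit of gas


def build_block(pool: list[Tx], block_gas_limit: int) -> list[Tx]:
    """Greedily include the best-paying transactions that fit under the limit."""
    block, gas_used = [], 0
    for tx in sorted(pool, key=lambda t: t.fee_per_gas, reverse=True):
        if gas_used + tx.gas <= block_gas_limit:
            block.append(tx)
            gas_used += tx.gas
    return block


pool = [Tx("a", 21_000, 30), Tx("b", 100_000, 50), Tx("c", 60_000, 10), Tx("d", 21_000, 40)]
small = build_block(pool, block_gas_limit=130_000)
large = build_block(pool, block_gas_limit=260_000)
print([tx.sender for tx in small], [tx.sender for tx in large])
```

Doubling the limit lets every pending transaction in, but every full node then has to execute and store twice as much per block.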

Higher capacity = bigger nodes

Scaling a blockchain by simply increasing its parameters leads to greater hardware requirements, whether in terms of compute, storage, or bandwidth. Often, an "ideal" hardware type is targeted by developers of a chain, e.g., "you should be able to keep up with the chain on a consumer Internet connection", or "the Ethereum full node storage requirement shouldn't be more than what a consumer laptop provides".

Following trends such as Moore's law, hardware resources get more affordable over time, as innovations in storage or compute happen. The "ideal hardware" follows, and gets better over time. Gradual parameter increases are therefore sensible, such as Ethereum steadily increasing the gas limit. Still, drastically increasing resource use will decrease the number of potential full nodes, as weaker hardware is ruled out.

Limits to monolithic scaling

If block producers are responsible for building the chain and are sufficiently decentralized, such that no single entity has a majority of the power, why do we need passive full nodes? Block producers are incentivized, capturing block rewards and transaction fees. Let them invest in great hardware!

Full nodes defend against consensus capture

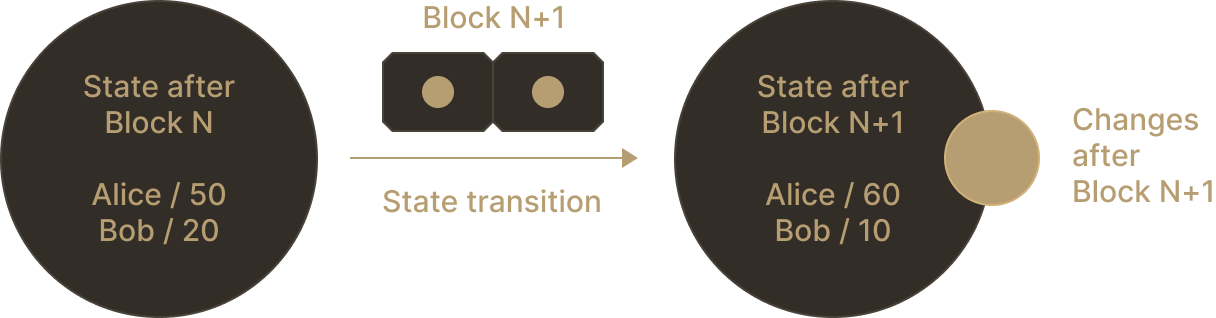

Blockchains are records of interactions. When you send 1 BTC to your friend, the Bitcoin blockchain records the payment by spending notes you own and creating a new 1 BTC note your friend can use. When you send 1 ETH to your friend, the Ethereum blockchain records the payment by deducting 1 ETH from your account balance and increasing your friend's balance by 1 ETH.

Whether based on fungible notes as on the Bitcoin network or based on accounts as on the Ethereum network, the tip of the chain informs you of the current state: who owns which notes (in Bitcoin) or the current balances of everyone (in Ethereum). There is a state transition anytime a transaction is executed and leads to a change in the state.
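The two state models can be contrasted with a minimal sketch. Both functions are simplified illustrations with hypothetical names: they ignore signatures, fees and scripts, and only show how a payment changes the state in each model.

```python
def transfer_account(balances: dict[str, int], sender: str, recipient: str, amount: int) -> dict[str, int]:
    """Account model: the state maps each account to a balance."""
    if balances.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    new_state = dict(balances)
    new_state[sender] -= amount
    new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def transfer_notes(
    notes: dict[str, tuple[str, int]],  # note id -> (owner, value)
    spent_ids: list[str],
    sender: str,
    recipient: str,
    amount: int,
    tx_id: str,
) -> dict[str, tuple[str, int]]:
    """Note model: the state is the set of unspent notes."""
    if len(set(spent_ids)) != len(spent_ids):
        raise ValueError("a note cannot be spent twice")
    if any(i not in notes or notes[i][0] != sender for i in spent_ids):
        raise ValueError("sender does not own every input note")
    total = sum(notes[i][1] for i in spent_ids)
    if total < amount:
        raise ValueError("inputs do not cover the amount")
    # Spent notes disappear from the state; new notes are created.
    new_state = {k: v for k, v in notes.items() if k not in spent_ids}
    new_state[f"{tx_id}:0"] = (recipient, amount)
    if total > amount:
        new_state[f"{tx_id}:1"] = (sender, total - amount)  # change back to sender
    return new_state


accounts_after = transfer_account({"you": 3}, "you", "friend", 1)
notes_after = transfer_notes({"t0:0": ("you", 3)}, ["t0:0"], "you", "friend", 1, "t1")
print(accounts_after)
print(notes_after)
```

In both cases the function maps an old state to a new one, which is exactly what a state transition is; only the shape of the state differs.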

Block producers are expected to make blocks containing only valid state transitions. If Alice the miner makes a new block minting herself 2,000 BTC, in flagrant violation of the consensus rules, other block producers are expected to reject Alice's block as invalid and move on. But what if Alice convinced a majority of block producers to let her mint the undue 2,000 BTC, by sharing the spoils with them? Block producers could keep building on the invalid state transition, making it part of the network's history.

In this scenario, full nodes provide a critical line of defense. Full nodes are able to catch Alice's invalid block and recognize that a majority of the consensus power has been captured. Full nodes are then able to coordinate off-chain to fork the network at the point where the invalid block is produced. Because increasing resource use decreases the number of passive full nodes, monolithic scaling reduces the "herd immunity" effect provided by a healthy ecosystem of full nodes.
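The key point is that a full node applies the rules itself, regardless of how much consensus power stands behind a block. The sketch below is a deliberately tiny illustration: the `Block` fields, the `BLOCK_REWARD` value and the single rule checked are all hypothetical stand-ins for a real chain's consensus rules.

```python
from dataclasses import dataclass

BLOCK_REWARD = 6  # hypothetical reward per block, in coins


@dataclass
class Block:
    producer: str
    claimed: int             # coins the producer pays to itself in this block
    fees: int                # total fees paid by the block's transactions
    producer_support: float  # share of consensus power building on this block


def full_node_accepts(block: Block) -> bool:
    """Validity depends only on the rules, never on `producer_support`."""
    return block.claimed <= BLOCK_REWARD + block.fees


honest = Block("bob", claimed=7, fees=1, producer_support=0.10)
captured = Block("alice", claimed=2_000, fees=1, producer_support=0.90)
print(full_node_accepts(honest), full_node_accepts(captured))
```

Alice's block is rejected even with 90% of block producers behind it, which is what lets full nodes notice the capture and coordinate a fork.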

Monolithic economics

We'll end our discussion on monolithic scaling by observing that high-capacity chains, which promise low fees by vastly increasing the parameters governing chain scale, always seem to eventually run out of space. Let's clearly draw the link between "high capacity" and "low fees" first.

The scarcity of resources provided by the network (block producers and full nodes) directly produces the level of fees users experience. A common misconception is that transaction fees pay for the operation of the chain. In truth, fees usually arise as a result of congestion, a competition between users to obtain access to these scarce resources. In this model, increasing capacity would indeed decrease fee levels, as greater levels of demand are served, pushing down the market price of blockspace.
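A toy model of this congestion pricing, under loud assumptions: each user submits one equally sized transaction with a bid, a block fits `capacity` of them, and the best bids win. The function name and the bids are made up, and real fee markets (minimum fees, EIP-1559 base fees) are more involved.

```python
def clearing_fee(bids: list[float], capacity: int) -> float:
    """Lowest bid that still gets included when only `capacity` transactions fit."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    ranked = sorted(bids, reverse=True)
    if len(ranked) <= capacity:
        return 0.0  # no congestion: nobody has to outbid anyone
    return ranked[capacity - 1]


bids = [50, 40, 30, 20, 10, 5]
for capacity in (2, 4, 10):
    print(f"capacity {capacity:>2}: fee to get in is {clearing_fee(bids, capacity)}")
```

With the same demand, raising capacity from 2 to 4 halves the fee needed to get in, and once capacity exceeds demand the fee falls to zero in this model.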

Assume for a moment that neither consensus capture nor invalid state transitions were an issue. We may be perfectly comfortable with reputable block producers running excellent hardware to provide the maximum amount of capacity to their network. Let the users decide what they need!

High-capacity networks operating in this paradigm often promise low fees to attract their first users. Yet, so far, no such network that reached critical adoption numbers was able to maintain that promise. Both Polygon and Binance Smart Chain eventually adopted EIP-1559 to organize their fee markets. Solana updated to a more granular approach to price their resources. The clever engineering of these networks was often not enough to process the tremendous amounts of user demand.

This is not a capitulation, but perhaps the recognition that a different approach is necessary to sustainably address the problem of scaling open networks. Indeed, these networks are now considering solutions inspired by the new modular paradigm that we will present next.

Unbundling a full node: the modular paradigm

To summarize: full nodes are a backstop against block producers capturing the chain for their own benefit. Raising the hardware requirements to follow the chain, while offering more capacity, decreases the opportunity for anyone to validate the work committed. Meanwhile, even with terrific hardware, it may be impossible to scale to the levels necessary to address growing user demand. Can we break out of this paradigm?

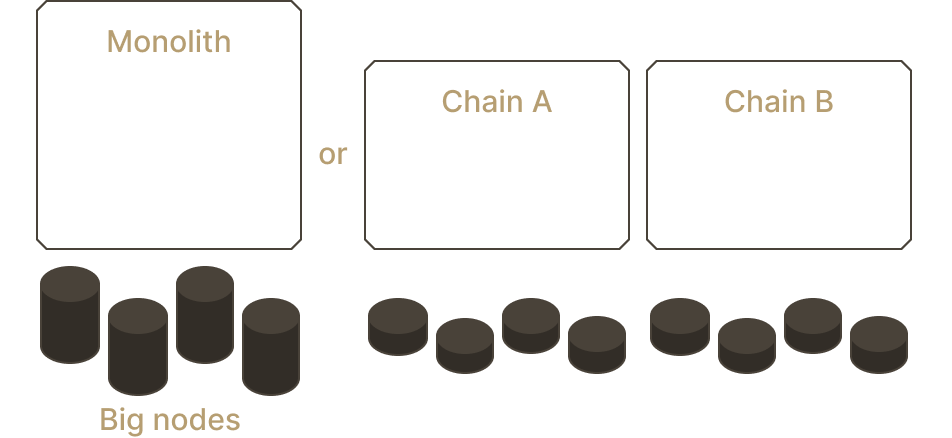

The first idea, historically, is to push operations "off-chain". Can't run everything on one single full node? No problem, just spin up a new chain. At first glance, this approach sounds very similar to monolithic scaling. Why would spinning up two equal-sized networks scale any better than a single doubly-sized network? The trick: instead of, say, 50 full nodes running a doubly-sized chain, we'll have two sets of 50 nodes, each running a single-sized chain. Performing this trick means modularizing the execution layer of a node, splitting blockspace across multiple networks.

This is not a free lunch. Now full nodes of chain A don't have a clue what's happening on chain B. Say Bob mints a Mooncat NFT on chain Origin, and wants to swap it against tokens on chain Destination. To send the NFT to Destination, Bob must convince Destination that he really does own the NFT. Without fully validating Origin, Destination must rely on some mechanism to assert that the assets being moved indeed belong to their rightful owners. And here's our second hint of what a full node does: settling execution by verifying the state of computations.

Destination must interpret what happens on Origin, without being a full node to Origin, as this would otherwise destroy all scalability. But we need one more guarantee from Origin, which we would implicitly obtain from running a full node: data availability. Let's say Destination could perfectly verify the state of Origin, and correctly assert what belongs to whom. We still must ensure that the state is available to us! Meaning, we must have the guarantee that all data from Origin were published at some point. Without this guarantee, there may be no way for anyone to verify a claim from Bob that he owns the Mooncat, or to disprove a competing claim from an impostor.

Full nodes implicitly guarantee data availability, by ignoring missing or incomplete blocks. Your full node should ignore Alice's block if she did not publish the payload of transactions contained in the block. It should also ignore Alice's block if its parent is missing.
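This implicit availability check can be sketched as follows. The `Block` type and `should_consider` are hypothetical; a withheld payload is modelled as `None`, and a real node would also validate the payload's contents.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Block:
    block_hash: str
    parent_hash: str
    payload: Optional[list[str]]  # None models a withheld transaction payload


def should_consider(block: Block, known_blocks: dict[str, Block]) -> bool:
    """Ignore blocks whose data, or whose parent, is not available locally."""
    if block.payload is None:
        return False  # the producer did not publish the transactions
    if block.parent_hash not in known_blocks:
        return False  # the parent is missing, so the history is incomplete
    return True


genesis = Block("g", parent_hash="", payload=[])
known = {"g": genesis}
published = Block("b1", parent_hash="g", payload=["tx1", "tx2"])
withheld = Block("b2", parent_hash="g", payload=None)
orphan = Block("b3", parent_hash="unknown", payload=["tx3"])
print([should_consider(b, known) for b in (published, withheld, orphan)])
```

A chain that is not fully validated by its observers loses this check for free, which is why data availability has to be guaranteed some other way.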

Essentially, we seek to avoid monolithic scaling by unbundling the core functions of a full node: settling execution based on available transaction data. Our campaign will explore the exciting design space enabled by the layered model of blockchain operations.

Conclusion

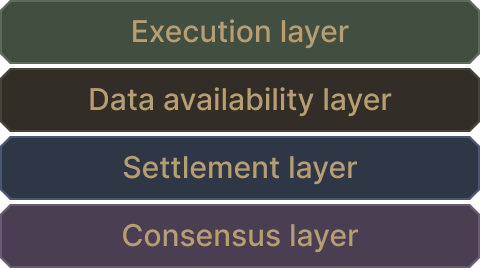

The modular approach splits full node functions along four layers. Full nodes execute user transactions. They verify that all data relevant to execution is available, so that anyone else can perform the execution as well. Settlement happens when the node convinces itself that execution was performed correctly. This campaign will not be concerned with the consensus layer, which provides nodes with a shared view of the data.
