A Deep Dive into Rollups

By: Barnabé Monnot

Off-chain execution solutions scale a network beyond the capacity of a single chain, with various trade-offs between scope, speed and security. For instance, fully programmable sidechains push speed limits further, but are responsible for their own security. Meanwhile, payment channels such as the Lightning network inherit the security of the blockchain they build upon.

In either case, the security of the scaling solution depends on where assets on the network settle. Because sidechains "settle on themselves", i.e., are their own arbiter of truth, the parent chain cannot convince itself independently that whatever took place on its sidechain is legit. Conversely, since payment channels eventually settle on the parent chain, the security of the base layer is extended to its secondary layer.

In the case of Lightning, settlement is possible on the Bitcoin network with cryptographic primitives. Concise proofs immediately convince Bitcoin full nodes about the state of the payment channel. Limited functionality is a feature here, as these concise proofs are easy to construct. However, these proofs are not so easy to build when a state is constructed through the execution of arbitrary computation on sidechains! How do we settle the state of generic off-chain computation?

There is a more subtle issue: data availability (DA). To close the channel and settle balances, Bob publishes to the Bitcoin network a commitment transaction he received from Alice. When Bob publishes an older transaction, Alice has some time to successfully challenge the settlement by publishing a fresher commitment from Bob. In other words, Alice must make the information that Bob signed a fresher commitment available to the network.

These two items, verifiable settlement and data availability, are critical to secure off-chain execution. The first effectively ensures that theft cannot be committed. The second provides certainty that any attempt to settle stale state can be successfully challenged.

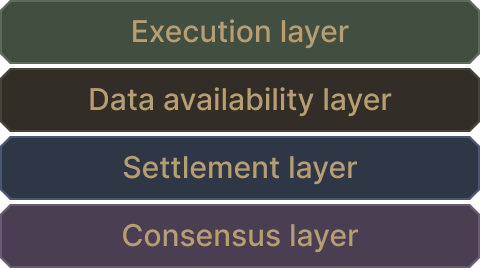

A full node of a monolithic chain performs all functions at the same time

Rollups are off-chain networks built to satisfy both properties. By their construction, rollups extend the base layer's perimeter of security to an off-chain, generic execution performed on the rollup. On every other level, rollups offer great flexibility, down to their method of settlement. Let's dig in.

Basics of rollups

To get a feel for how rollups work, we'll discuss in turn each rollup flavor, as determined by their settlement procedure: optimistic rollups and validity ("zk") rollups.

Optimistic rollups (ORs)

Timelocks in Lightning determine a challenge period during which any party may contest the settlement, e.g., by revealing a fresher commitment. Optimistic rollups follow a similar intuition.

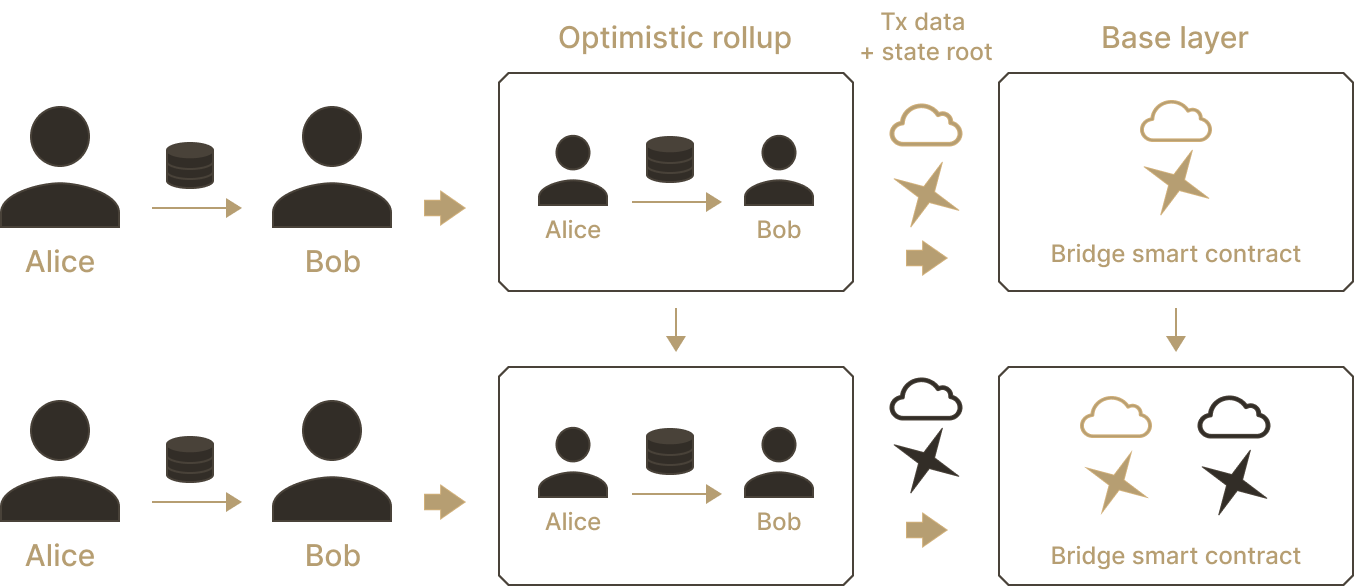

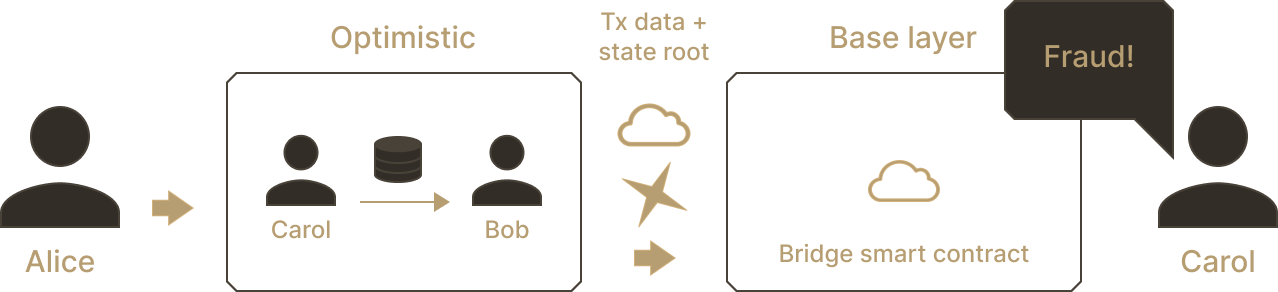

State roots of the rollup are periodically published to the base layer. The root succinctly encodes the current balances and variables stored on the rollup. Given the state, Carol can successfully prove that she owns her rollup assets. Conversely, when an invalid root is posted to the base layer, Alice may be able to prove that she owns Carol's assets instead! So how do we ensure that the base layer receives the correct root?
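To make "the root succinctly encodes the current balances" concrete, here is a minimal sketch of a state root computed as a Merkle root over account balances. It is only an illustration: the leaf encoding, the use of SHA-256 and the function names are assumptions made for this example, and production rollups rely on more elaborate structures.

```python
import hashlib


def leaf_hash(account: str, balance: int) -> bytes:
    # Hypothetical leaf encoding: "account:balance", hashed with SHA-256.
    return hashlib.sha256(f"{account}:{balance}".encode()).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    # Hash pairs of nodes level by level until a single root remains.
    if not leaves:
        return hashlib.sha256(b"").digest()
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


def state_root(balances: dict[str, int]) -> str:
    # Sort accounts so that the same state always yields the same root.
    leaves = [leaf_hash(acct, bal) for acct, bal in sorted(balances.items())]
    return merkle_root(leaves).hex()


honest = state_root({"Alice": 5, "Carol": 7})
forged = state_root({"Alice": 12, "Carol": 0})  # Alice claims Carol's assets
print(honest != forged)
```

Any change to a single balance changes the root, which is why posting an invalid root amounts to asserting a different ownership of assets.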

We allow anyone to challenge the latest received root, essentially claiming "the sequence of transactions executed on the rollup does not lead to the asserted state root." This challenge is known as a fraud proof. Seeing that Alice asserts an invalid root, Carol immediately challenges the claim.

This is where data availability kicks in. Alice may claim "I did a transaction that gives me control of Carol's assets!", which Carol cannot challenge without access to a record of the transaction. Data availability ensures that any executed transaction was published at least once, entering the public record.

Different constructions exist (more on this later), but in our current model, the base layer, acting as a judge, must decide whom to trust: Alice the asserter or Carol the challenger. Like any good judge, the base layer needs to see some evidence. With optimistic rollups, all transactions executed on the rollup are posted as raw data to the base layer. When a challenge is raised, the base layer re-executes parts of the data to make its decision.
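The judging step can be sketched as follows. This is a simplified model, not how any particular rollup implements fraud proofs: it re-executes the whole batch rather than parts of it, the state commitment is a plain hash of the balances, and the names `commit`, `execute` and `challenge_succeeds` are made up for this example.

```python
import hashlib
import json


def commit(state: dict[str, int]) -> str:
    # Stand-in for a state root: hash of the canonically serialized balances.
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def execute(state: dict[str, int], txs: list[tuple[str, str, int]]) -> dict[str, int]:
    # Apply (sender, recipient, amount) transfers; invalid ones are skipped.
    new_state = dict(state)
    for sender, recipient, amount in txs:
        if amount <= 0 or new_state.get(sender, 0) < amount:
            continue
        new_state[sender] -= amount
        new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def challenge_succeeds(pre_state, txs, asserted_root: str) -> bool:
    # The judge re-executes the published data and compares the outcome
    # with the root claimed by the asserter.
    return commit(execute(pre_state, txs)) != asserted_root


pre = {"Alice": 1, "Carol": 10}
published = [("Carol", "Alice", 2)]
honest_root = commit({"Alice": 3, "Carol": 8})
forged_root = commit({"Alice": 11, "Carol": 0})  # Alice takes everything
print(challenge_succeeds(pre, published, honest_root))
print(challenge_succeeds(pre, published, forged_root))
```

The check only works because `published` is available to the judge, which is the role data availability plays here.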

In optimistic rollups, transactions executed in the rollup blocks are posted as raw data to the base layer, along with a state root committing to the rollup state.

To summarize, optimistic rollups settle after the end of a challenge window, typically set to a duration long enough for a fraud proof to be constructed and included on the base layer. Carol must wait until the end of the challenge window to retrieve assets she withdrew from the rollup on the base layer. For instant withdrawals, fast bridges exist: they pay users out of their own inventory right away, then wait for the challenge window to close on the user's behalf.
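The withdrawal rule can be written down in a few lines. This is a sketch under stated assumptions: the one-week window is an illustrative value chosen for this example (the text above gives no figure), and `RootClaim` and `can_finalize_withdrawal` are hypothetical names.

```python
from dataclasses import dataclass

# Assumption for illustration only: a one-week challenge window.
CHALLENGE_WINDOW_SECONDS = 7 * 24 * 60 * 60


@dataclass
class RootClaim:
    state_root: str
    posted_at: int            # base layer timestamp, in seconds
    challenged: bool = False  # set once a fraud proof has succeeded


def can_finalize_withdrawal(
    claim: RootClaim, now: int, window: int = CHALLENGE_WINDOW_SECONDS
) -> bool:
    # A withdrawal settles only if the root survived the whole window.
    return not claim.challenged and now >= claim.posted_at + window


claim = RootClaim(state_root="0xabc", posted_at=1_000)
print(can_finalize_withdrawal(claim, now=1_000 + 3600))
print(can_finalize_withdrawal(claim, now=1_000 + CHALLENGE_WINDOW_SECONDS))
```

A fast bridge would pay Carol as soon as it is satisfied the root is valid, and then collect the funds itself once `can_finalize_withdrawal` becomes true.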

When a committed state root does not match with the transactions executed on the rollup, anyone can submit a fraud proof to the base layer and challenge the state transition.

Validity ("zk") rollups (VRs)

With optimistic rollups, we assume state roots posted to the base layer are correct until proven otherwise. Data availability ensures a proof can always be constructed. Validity rollups instead harness the power of cryptography to immediately provide a succinct proof of execution validity.

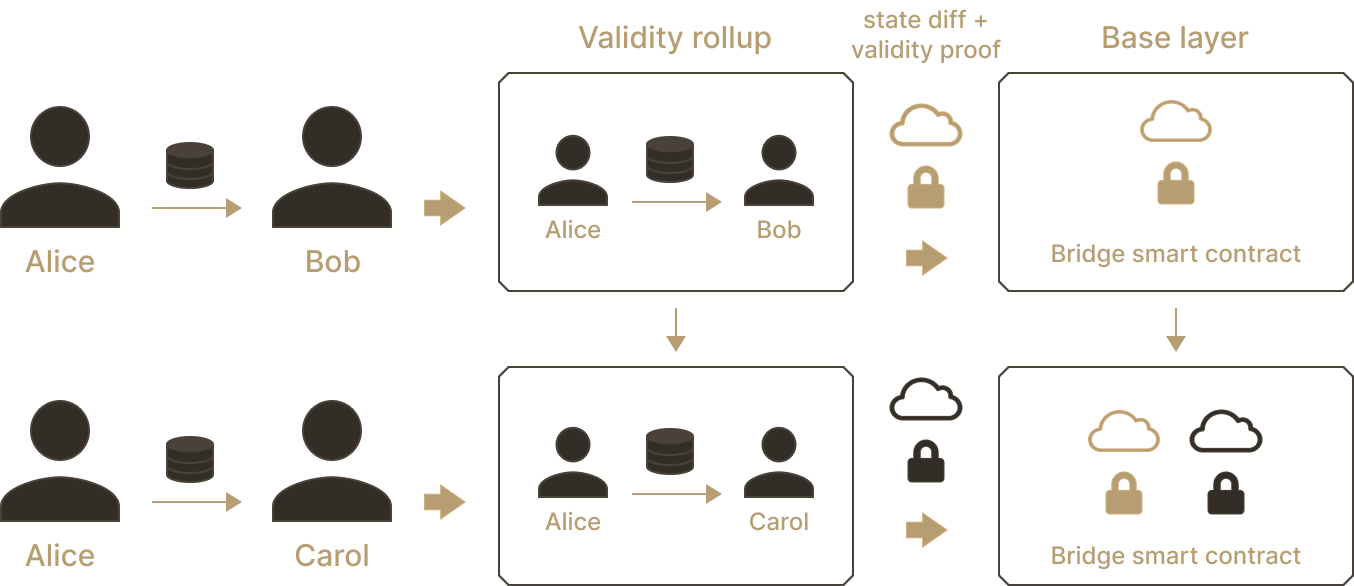

Suppose a rollup allows for payments between users. Alice sends Bob 10 UND, of which Bob sends 5 to Carol, which Carol immediately transfers to Alice. The validity rollup, instead of publishing all three transactions as data to the base layer, posts the state difference. After the three transactions, the state difference is this: Alice -5, Bob +5 and Carol +0.

Along with the state difference, the validity rollup publishes a cryptographic proof that the rollup executed transactions leading exactly to this state difference. This means anyone (including the base layer) can reconstruct the state of the validity rollup with the guarantee of being correct, by simply adding up state differences and verifying their corresponding proofs.
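The three-payment example can be checked by hand or with a short script. The sketch below only covers the bookkeeping: it nets the transfers into a state difference and adds that difference to a prior state. Proof generation and verification are not modeled, the function names are made up for this example, and the starting balances are arbitrary.

```python
from collections import defaultdict


def state_difference(txs: list[tuple[str, str, int]]) -> dict[str, int]:
    # Net effect of a batch of (sender, recipient, amount) transfers.
    diff: dict[str, int] = defaultdict(int)
    for sender, recipient, amount in txs:
        diff[sender] -= amount
        diff[recipient] += amount
    return dict(diff)


def apply_difference(state: dict[str, int], diff: dict[str, int]) -> dict[str, int]:
    # Reconstruct the next state by adding the published difference.
    new_state = dict(state)
    for account, delta in diff.items():
        new_state[account] = new_state.get(account, 0) + delta
    return new_state


txs = [("Alice", "Bob", 10), ("Bob", "Carol", 5), ("Carol", "Alice", 5)]
diff = state_difference(txs)
print(diff)  # {'Alice': -5, 'Bob': 5, 'Carol': 0}

# Arbitrary starting balances, chosen for this example.
print(apply_difference({"Alice": 20, "Bob": 0, "Carol": 0}, diff))
```

Three transactions collapse into a single difference, and an account whose net change is zero, like Carol here, would not even need to be included in the published data.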

The magic of cryptography is that verifying the proofs is much, much faster than re-executing the transactions themselves. A single actor is able to convince everyone else that they have performed the transactions as expected, without introducing invalid transitions.

The base layer is immediately convinced of the validity of rollup execution, thanks to the validity proof.

We still require data availability, however, to ensure that all state differences are published to the public record at least once. A malicious Bob could perform a transaction modifying the state without publishing the state difference, while publishing a proof that he has correctly advanced the state. Everyone is able to convince themselves that Bob's transaction is legit, but no one knows what Bob did!

Why is this bad? Suppose Bob does a trade on an AMM, doesn't publish the trade, but moves the rollup state forward by publishing the validity proof of his trade. Effectively, Bob "bricks" the rollup, making it impossible for anyone else to move the state forward since balances have changed. In particular, it becomes impossible to perform withdrawals. As a result, Bob's unpublished transaction freezes all assets permanently on the rollup, which must then be manually reset.

Rollups and liveness

Rollups extend the security of the base layer, settling trustlessly with the help of data availability. In optimistic rollups, settlement is delayed to provide a challenge window, with DA ensuring the possibility of constructing challenges to invalid settlements. In validity rollups, settlement is immediate given a validity proof of execution, with DA ensuring all parties have access to the current state of the rollup.

Yet it is not enough to ensure correct execution of rollup transactions. In the world of distributed systems, engineers are concerned with both safety and liveness. Safety is our correctness property: a rollup can't misbehave and convince the base layer that an invalid state root is indeed valid. Meanwhile, liveness ensures that the system makes progress, that transactions are always eventually executed.

Liveness may be lost in two ways. The first affects the rollup as a whole. Suppose the rollup only has one block producer, Bob, and Bob suddenly goes offline. These are computer systems after all! What if Bob loses the key allowing him to produce blocks for the rollup, or what if Bob's servers crash and no block is produced? This is a liveness issue. All user assets on the rollup are permanently stuck, since Bob alone can create blocks. As Bob is unresponsive, withdrawals can't be processed either.

At the level of a single user, liveness may be lost in the case of censorship. While Bob cannot outright steal Alice's assets (our safety guarantee), Bob could prevent Alice's transactions from ever getting executed by never including her in his blocks: in particular blocking her from withdrawing and effectively freezing her assets.

To prevent liveness losses and guarantee censorship-resistance, rollups are meant to decentralize their set of block producers, called sequencers. When Bob censors Alice, she may be included by Carol, another rollup sequencer. When Bob is offline, the system rotates sequencers and gives Carol a chance to make a block herself, allowing users to advance the state of the rollup and support withdrawing.
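As a rough illustration of rotation, the sketch below picks the sequencer for a slot in round-robin order and skips anyone who is offline. Real sequencer selection mechanisms vary and are typically more involved; `next_sequencer` and its parameters are hypothetical names used only here.

```python
from typing import Optional


def next_sequencer(
    sequencers: list[str], slot: int, online: set[str]
) -> Optional[str]:
    # Round-robin: start from the slot's designated sequencer and fall
    # through to the next one in line if the candidate is offline.
    n = len(sequencers)
    for offset in range(n):
        candidate = sequencers[(slot + offset) % n]
        if candidate in online:
            return candidate
    return None  # nobody can produce a block: liveness is lost


sequencers = ["Bob", "Carol"]
print(next_sequencer(sequencers, slot=0, online={"Bob", "Carol"}))  # Bob
print(next_sequencer(sequencers, slot=0, online={"Carol"}))         # Carol
print(next_sequencer(["Bob"], slot=0, online=set()))                # None
```

The last call is the single-sequencer failure described earlier: with only Bob in the set and Bob offline, no block can be produced.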

Most rollups also feature a "forced inclusion" method at the base layer itself. Any user may call the bridge smart contract deployed on the base layer, to process their rollup transaction directly and advance the state. As long as the base layer is decentralized enough and doesn't censor Alice, she can force include her transaction. Of course, it is not ideal, as the base layer could be expensive to use, defeating the scalability benefits a rollup offers. But in catastrophic cases, forced inclusion offers a critical way out.

How rollups provide scale

Trustless settlement on the base layer, data availability, decentralized sequencers... With these items, rollups are able to extend the security of the base layer to their own execution while providing orders of magnitude more scale.

Who does what

At the base layer, too much transaction execution requires bigger full nodes, which hurts decentralization. On the other hand, guaranteeing DA is somewhat less taxing: it's a matter of getting the data from peers and storing it without any kind of processing.

Full nodes check one another to ensure the data is published widely at least once. Past a certain point, it is not even necessary for a full node to keep the data around. Data indexers, the likes of which power block explorers, will collect and store historical data. What matters is that everybody agrees the data was fully available to anyone once.

On a rollup, resource constraints are flipped: rollup full nodes can be as large as necessary and provide significantly more blockspace than the base layer. Yes, this means rollup full nodes are far fewer than base layer full nodes. But with fraud or validity proof-based settlement and DA, security is preserved. Big nodes means execution is cheap and plentiful.

However, rollups must post their data somewhere and pay for this service, which can get expensive. Providing more DA at the base layer lowers this cost dramatically, but it remains non-trivial. Ethereum expects to eventually provide over 1.3 MB/s bandwidth for DA, an amount far in excess of current demand.

Rollup costs

The promise of rollups, beyond serving greater user demand, is to reduce the costs for transacting on their network. It is worth spending time looking at the mechanisms that govern rollup pricing.

On the base layer, block rewards and/or user fees compensate block producers for their service, namely, running a full node and participating in the consensus. On a rollup, it is also necessary to compensate sequencers for full node operations incurring real world expenses, either paying them with newly-minted tokens (when the rollup has its own token) or from user fees directly.

Sequencers must also pay for DA, that is, for publishing their rollup data (transaction data and state roots for ORs, state differences and validity proofs for VRs) to the DA layer. The cost is typically passed on to rollup users.

Finally, while rollups offer high-powered execution environments, rollup blocks could also become full! In this case, congestion pricing mechanisms such as EIP-1559 could be used to "jump the queue" and express user time preferences. The rollup dynamically adjusts an entry price to equalize its supply of blockspace with the demand for it.
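For reference, here is a sketch of the EIP-1559 base fee update rule as specified for Ethereum: the fee rises when a block uses more gas than the target and falls when it uses less, by at most one eighth per block. Whether and how a given rollup adopts this rule is up to the rollup; the gas target in the example is an illustrative value.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # constant from the EIP-1559 specification


def next_base_fee(base_fee: int, gas_used: int, gas_target: int) -> int:
    # Integer arithmetic, mirroring the update rule in the EIP.
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        delta = max(
            base_fee * (gas_used - gas_target)
            // gas_target
            // BASE_FEE_MAX_CHANGE_DENOMINATOR,
            1,
        )
        return base_fee + delta
    delta = (
        base_fee * (gas_target - gas_used)
        // gas_target
        // BASE_FEE_MAX_CHANGE_DENOMINATOR
    )
    return base_fee - delta


TARGET = 15_000_000  # illustrative gas target
print(next_base_fee(1_000, gas_used=30_000_000, gas_target=TARGET))  # 1125
print(next_base_fee(1_000, gas_used=0, gas_target=TARGET))           # 875
```

A full block (twice the target) raises the entry price by 12.5%, and an empty block lowers it by the same proportion, which is how supply and demand are brought back in line over successive blocks.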

Rollup variations

So far, we've emphasized a rollup model where the secondary chain posts all data to and settles on the base layer. On Ethereum, the base layer provides a limited amount of blockspace supporting general execution of any smart contract. When the rollup uses the base layer for data availability and settlement, trustless two-way bridges allow users to move assets between the base layer and the rollup. The bridges are deployed as pairs of contracts, one on the base layer validating the rollup's execution (with fraud or validity proofs), and one on the rollup itself, processing deposits and withdrawals.

This is not the only model. We'll discuss here constructions decoupling even further the data availability and settlement layers.

Sovereign rollups

Celestia, a base layer protocol, suggests getting rid of the execution layer currently present on the Ethereum base layer. Rollup full nodes must also be full nodes to their base layer to listen in on bridge events such as deposits or proofs. Yet not all activity on the base layer concerns the rollup itself. Why would your rollup care that a Cryptokitty sale happened on the base layer?

An Ethereum full node provides consensus over blocks and transactions, ensuring validity of all blocks in the canonical chain. It settles its rollups on its execution layer, where smart contracts live. It also provides a DA layer for its rollups.

In comparison, the Celestia base layer provides a minimal feature set. Raw rollup data is posted to the Celestia base layer, with Celestia validators coming to consensus over which data is canonical and in which order the data appears, but not whether the data is valid or not. In other words, a rollup on Celestia publishes data to the Celestia base layer, and the rollup itself is solely responsible for determining which data is relevant for running its operations.

On Ethereum, data validity is settled at the base layer, by the rollup bridge smart contract living on Ethereum's execution layer. On Celestia, the rollup "settles on itself": sequencers of the rollup rely on fraud or validity proofs gossiped amongst one another to ascertain the validity of the data they read from the Celestia base layer. This approach is known as sovereign rollups, as opposed to Ethereum smart-contract rollups.

There are pros and cons to either approach.

Sovereign rollups are not tied to settling on an EVM-based execution layer, giving them flexibility to innovate with fraud or validity proofs.

Smart contract rollups may want to upgrade themselves periodically, yet the ability to upgrade the smart contract bridge opens the rollup to theft if a malicious upgrader rewrites the bridge contract to unlock all assets and transfer them to the attacker. An upgrade of a sovereign rollup looks more like a hard fork, where the social layer is able to backstop such attacks.

By sharing a settlement layer, e.g., Ethereum, smart contract rollups may be able to pool liquidity on the base layer and bridge to this "hub". An emerging design based on Celestia builds a "settlement rollup" as a sovereign rollup, with many sovereign rollups bridging to this "hub" settlement rollup 🤯

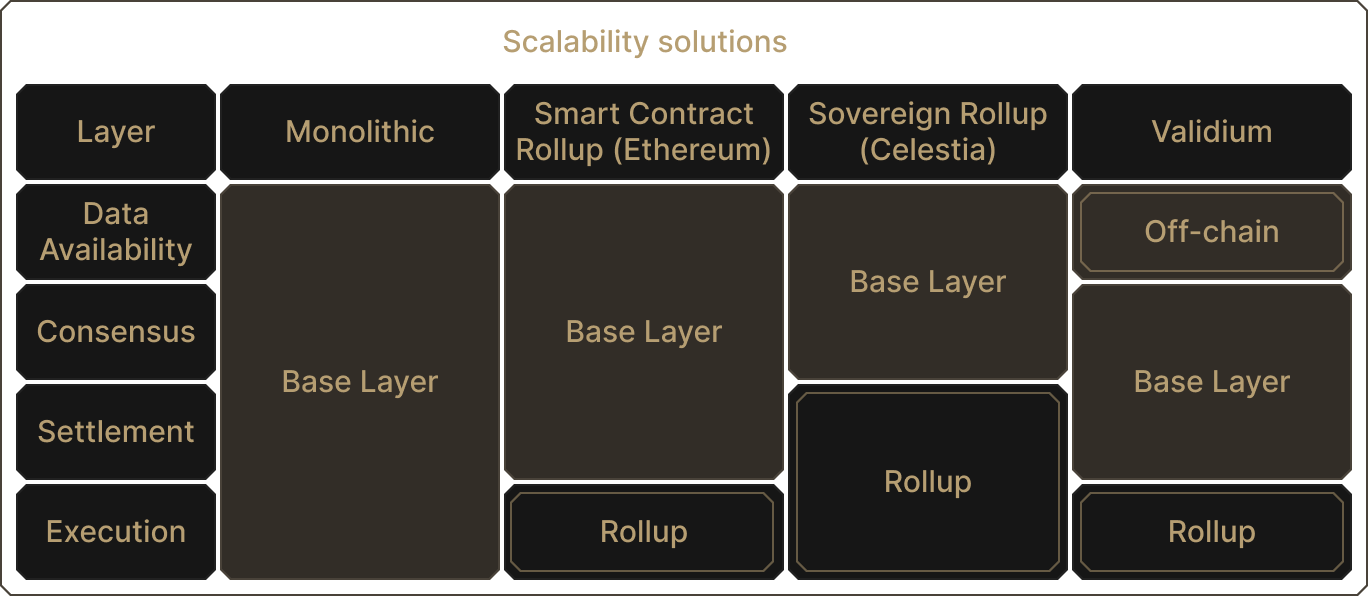

The gist here is that by unbundling the functions of a full node (consensus, settlement, data availability, execution), myriad designs open up, each of which emphasizes a different trade-off tailored to its needs.

Publishing to other DA layers

Today, Ethereum DA is expensive, and while future upgrades plan to reduce costs, secondary layers have not waited to find workarounds.

The simplest idea is to publish their data elsewhere. For instance, instead of putting the data on Ethereum, a rollup could claim "Trust me, I'll keep the data off-chain for you". Such constructions have taken off for validity proof-based scaling, and are known as validiums. The validium posts validity proofs of execution to the Ethereum base layer, but keeps the data off-chain. As we've learned above, without DA, user funds may be frozen, even if validity proofs protect against outright theft. There is an element of trust here, but not publishing the data allows validiums to offer transactions at very low costs.

Data availability committees (DACs) are a popular alternative. Basically, a multi-sig signs off on the availability of data, and commits to providing it on-demand. This provides redundancy to the validium design instead of trusting a single party, at the minor cost of more overhead (publishing signatures from members of the multi-sig).
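The committee check boils down to counting valid attestations against a threshold. The sketch below is a toy model: it uses HMAC tags from the Python standard library as a stand-in for the public-key signatures a real committee would use, and the member names, keys and threshold are invented for this example.

```python
import hashlib
import hmac


def attest(secret: bytes, data_root: bytes) -> bytes:
    # Stand-in for a member's signature over the data commitment.
    return hmac.new(secret, data_root, hashlib.sha256).digest()


def is_available(
    data_root: bytes,
    attestations: dict[str, bytes],
    member_keys: dict[str, bytes],
    threshold: int,
) -> bool:
    # Count attestations from known members that verify against the root.
    valid = sum(
        1
        for member, tag in attestations.items()
        if member in member_keys
        and hmac.compare_digest(tag, attest(member_keys[member], data_root))
    )
    return valid >= threshold


keys = {"m1": b"key-1", "m2": b"key-2", "m3": b"key-3"}  # hypothetical members
root = hashlib.sha256(b"rollup batch data").digest()
sigs = {"m1": attest(keys["m1"], root), "m2": attest(keys["m2"], root)}
print(is_available(root, sigs, keys, threshold=2))  # True
print(is_available(root, sigs, keys, threshold=3))  # False
```

Note what the check does and does not establish: it shows that enough members vouched for the data, not that the data is actually retrievable, which is where the element of trust remains.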

Celestiums are rollups which publish their data to the Celestia DA layer, while settling on Ethereum. Like the much-maligned multi-sig bridges moving to more trustless designs, Celestiums allow rollups to move towards guarantees provided by the Celestia validators, a permissionless and decentralized set of maintainers. Misbehavior, such as data unavailability, is punished on the Celestia chain with slashings, reinforcing cryptoeconomic security.

Unbundling core full node functions allows for modular designs. Adapted from P. Watts

Conclusion

Once the core functions of a full node are unbundled, each layer specializes in what it does best, pushing scalability limits upwards for the whole system. New designs explore the possibilities offered by data availability and settlement layers, to secure off-chain computation and bridge networks trustlessly.

It's too early to tell which permutations will eventually solidify and attract the most users. A combination of product-market fit and technical innovation will decide what the scalability landscape will look like a few years from now. In the meantime, clarity on the designs and their trade-offs is critical to interpret the many claims made by projects in this space.

Now, let's have a look at that spell...